Gender Classifier

This blog is not a tutorial but rather a journal of updates and rants I wrote on Facebook while working on a coding project during my vacation in 2017.

It is nostalgic to read every single log and remember what was going through my head. Those days of clicking away at my keyboard -- building things that mattered to me, complaining about technical difficulties that I could not solve -- were the foundation of who I am today. I will try to provide as much context as I can through text and images in these logs.

This project is about real-time gender classification using Keras and Python -- I am trying to teach a computer how to tell if a person in an image is male or female. Strictly speaking, this is a sex classifier because it can only do male/female classification, whereas gender is a social construct consisting of more than just male and female. At the time, I did not have any clue how to classify other genders based on facial structure, so there's only male and female in this one.

Hopefully, this animation can show how the classifier works.

I was quite intrigued by CNNs (Convolutional Neural Networks) and was playing around with projects and tutorials related to them. I had been following a tutorial for Cats and Dogs classification, which was pretty simple and very boring, so I decided to build a classifier of my own. Having no prior experience of building something like this, I was definitely in for a show here.

A SAMPLE OF THE CODE OUTPUT: (GITHUB LINK)

4th Oct 2017

I had been following a food classification tutorial where I had to download hundreds of images of Pasta and Hotdogs which was quite boring -- so I never finished it.

I guess scraping the web for thousands of images of food is the worst job one can have -- now I know why Jin Yang went crazy in Silicon Valley. In my case, I tried to solve the problem by writing a Python script that sends requests to check the validity of the images on the web server. But the main issue is the maximum number of requests a server can handle. If it cannot process a request, it returns nothing, which breaks my script completely. I will try to find a better and much simpler solution for this issue.

I guess my vacation is going great and the best part of it is that I'm able to do projects that I really wanted to do.

I worked on an image classifier that can tell whether the animal in an image is a dog or a cat (Keras-Cats-and-Dogs), and it was really fun. I never realized that code already sitting on my computer could make my work easier than ever while I was searching the whole Internet for a better solution. I still laugh when I remember going through my old code and finding 1000 lines of code for a classifier that distinguishes between humans, horses, cats, and dogs.

The best part of it was that the project already had a large set of training images -- no need to scrape the web anymore. But the worst part was the messy code. Literally, a simple line of code for reshaping an image was overcomplicated to 30 lines of jargon. I went through the entire code and got rid of all the unnecessary code -- pretty much half of the code was gone.

6th Oct 2017

I want to do a Deep Learning project on gender classification through images, but I haven't found any pre-existing work on it. I assume that it has not had much success, so people haven't done it. But I want to have a shot at it. Yesterday, I learned about face detection using haarcascades -- it is an open-source, computationally light object detector made by a few developers -- awesome people.

I have an idea to use this detector in python to detect the faces of people and crop them out, specify the male and female faces manually to build a dataset, and train a neural network. I don't know if this is gonna work but I am excited about it.

I have already done one project about image classification of Cats and Dogs -- it was great. I love that project. However, this one is not about body shapes. It is about the facial structures of males and females, which are much harder to learn. But since male and female faces have significant visual differences, I hope the neural net can identify the features on its own and learn them. I am very excited about this.

Issue #1 -- A new Training Dataset

The images of males and females that I have locally are of low quality and the Haarcascade detector cannot identify the faces. So I have to find another dataset of images of humans and classify them manually. This might take some time. I might also have to find a more accurate face detection algorithm.

Issue #2 -- Grunt Work

I've found a lot of images on Image-net.org, but the problem is that they need an email address from an organization to download the dataset, and since I am not part of any organization, I cannot download it. They do allow us to download the URLs and manually visit and download each image, but that would take very long and I don't want to do that boring work. So, I am gonna reuse a Python script that automatically reads the file containing the URLs and downloads every single one of them.

Now, the script does work, but the problem I am facing right now is with the servers it sends requests to. The script crashes when a server hits its maximum number of requests or when the connection times out. It annoyed me this morning: I went for a run and the script had crashed within 2 minutes. I did a lot of research but I still cannot solve this problem. The one answer that shows a bit of promise is the try and except statement, and I am hoping that I can use it to solve this problem and get the images without any issue.
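
Looking back, the try and except idea can be sketched roughly like this. It is not the original script (that one is long gone); the names `fetch_bytes` and `download_all`, the file naming, and the 10-second timeout are all made up for illustration, and it uses only the standard library.

```python
import os
import urllib.error
import urllib.request


def fetch_bytes(url, timeout=10):
    """Download one URL and return its raw bytes (raises on failure)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def download_all(urls, dest_dir, fetch=fetch_bytes):
    """Try every URL; record and skip the ones that fail instead of crashing."""
    os.makedirs(dest_dir, exist_ok=True)
    saved, failed = [], []
    for index, url in enumerate(urls):
        try:
            data = fetch(url)
        except (urllib.error.URLError, OSError, ValueError) as error:
            # Covers timeouts, refused connections, HTTP errors and bad URLs.
            failed.append((url, repr(error)))
            continue
        # Hypothetical naming scheme: image_00000.jpg, image_00001.jpg, ...
        path = os.path.join(dest_dir, f"image_{index:05d}.jpg")
        with open(path, "wb") as handle:
            handle.write(data)
        saved.append(path)
    return saved, failed
```

The point is that one bad server only costs one image: the loop notes the failure and moves on, and the `failed` list can be retried later.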

I guess I have fixed the issue but I am still unsure about it. I will explain it in the morning. Good night!

7th Oct 2017

About 200 images have been downloaded. Perfect.

500 images within 2 hours; the script is working perfectly. I think the Tumblr website is stable and the download requests are accepted, but there is always the case where an image has been removed and the script crashes. I've already figured out a solution to that: use the requests module in Python to check whether the image is available or not. Love this Python script. I want to improve it further, but I will probably do that work when the time comes.
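
For the curious, the availability check could look something like the sketch below. I used the requests module back then; this version swaps in the standard library's `urllib` so it runs without installing anything, and the function name `image_available` and the `opener` parameter are my own inventions for illustration.

```python
import urllib.request


def image_available(url, opener=urllib.request.urlopen, timeout=10):
    """Return True if the URL answers 200 with an image content type."""
    try:
        # HEAD asks for the headers only, so nothing is downloaded yet.
        request = urllib.request.Request(url, method="HEAD")
        with opener(request, timeout=timeout) as response:
            content_type = response.headers.get("Content-Type", "")
            return response.status == 200 and content_type.startswith("image/")
    except (OSError, ValueError):
        # Removed image (HTTP error), dead host, timeout, or malformed URL.
        return False
```

Checking the content type matters because some hosts answer a removed image with a normal HTML "not found" page. One caveat: a few servers refuse HEAD requests, in which case a normal GET would be needed instead.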

The downloading of all the male images is completed. Jesus Christ! 833 images within 2.5 hrs. That's cool. Now it's time for the female images. I don't know how long it is gonna take to fix the URLs before the script can start its work, but I am really happy that the images are downloaded and I didn't have to do much hard work.

There is still another future issue. The haarcascade library cannot detect the faces of some images that I've downloaded.

I think the solution for this problem is the Face Detection API by Google and I think I will give it a shot, but the thing that is bugging me is that the API runs on the Google Cloud Platform, which is not free. I've found a Python script for the API on GitHub and I will try to use it to detect the faces in some images. Fingers crossed!

The Face Detection API by Google is written in TensorFlow, which is a pretty complex framework, and I don't like it that much. That's why I use Keras: the framework is easy, user-friendly, and uses TensorFlow as its backend. So, I am indirectly using TensorFlow, but efficiently. I know that the code is going to be written straight for TensorFlow but I will try to understand it.

8th Oct 2017

It's getting harder day by day.

Issue #2: FIXED

The issue that I was having with the detector has now been resolved, thanks to the dlib library in Python. It uses machine learning to detect the faces and I am surprised at the accuracy of the detection compared to the haarcascade detector -- it takes care of all the angles.

Issue #3 -- I do not know

A new issue has arrived -- my computer is slow AF. I do have an AMD GPU in it, but since I have been using Ubuntu 16.10 for the last few years -- which does not provide any driver support for AMD GPUs -- it is very, very slow compared to Windows. This means my computer can crash anytime during training or worse -- while loading the images before starting the training.

Now I can do one thing -- instead of loading all the images and processing them before initiating training, I can save a preprocessed array of images beforehand and use that to train the model. Hopefully saving me from restarting my computer every 5 minutes.

But first things first, I will back up whatever is left here. Probably upload all the work to GitHub. Previously, I had lost all of my data while I was trying to dual-boot Windows and Ubuntu -- what an idiot I was, trying to do it on my own instead of following a YouTube tutorial.

Holy Sh*t! This idea of saving the NumPy array nearly crashed my computer, and the data that it managed to save was 848.2 MB. What! Loading this much data at once will take not only more time but more memory as well. I've got to figure out something else.

But I am gonna try to load the array. Hopefully, it does not crash.

15th Oct 2017

That idea can work, but the data has to be split up to load properly, and it might be a bit risky. But wait! I can do the same job with one set of images at a time. I think I should load the male dataset, extract all the cropped arrays and save them, then load the female dataset, extract all the cropped arrays and save them as well, and merge the two. Can I even do that? Sure.
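
A minimal sketch of that merge step, assuming each class was already saved as its own `.npy` file of cropped faces with the same image shape. The function name `merge_saved` and the 0/1 label convention are hypothetical choices for this example, not something recovered from the original code.

```python
import numpy as np


def merge_saved(male_path, female_path):
    """Load two per-class face arrays and return (images, labels)."""
    male_faces = np.load(male_path)
    female_faces = np.load(female_path)
    # Stack along the first axis: (n_male + n_female, height, width, channels).
    images = np.concatenate([male_faces, female_faces], axis=0)
    labels = np.concatenate([
        np.zeros(len(male_faces), dtype=np.uint8),   # 0 = male (arbitrary)
        np.ones(len(female_faces), dtype=np.uint8),  # 1 = female (arbitrary)
    ])
    return images, labels
```

Keeping the pixels as `uint8` until training time also helps with memory: a `float64` copy of the same images takes eight times the space.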

And by the way, the NumPy array I was loading nearly -- very nearly -- maxed out my memory. Thankfully I was able to cancel the build process. It requires huge RAM to do this -- 4GB ain't gonna cut it.

Yup! The model crashed the computer. Now I have to install Keras and TensorFlow on Windows 10 to run the GPU at its maximum capacity and overclock it if required. Not the kind of progress I was hoping to make. I just want this model to get trained so that I can add more models in the future.

16th Oct 2017

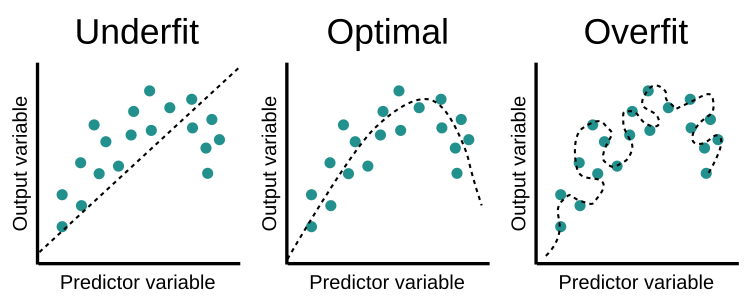

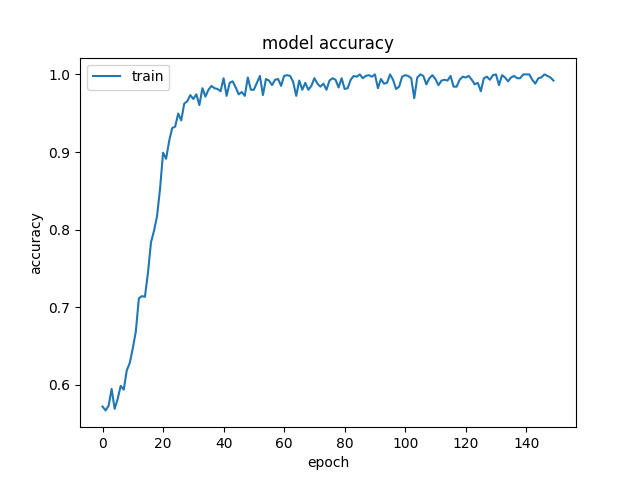

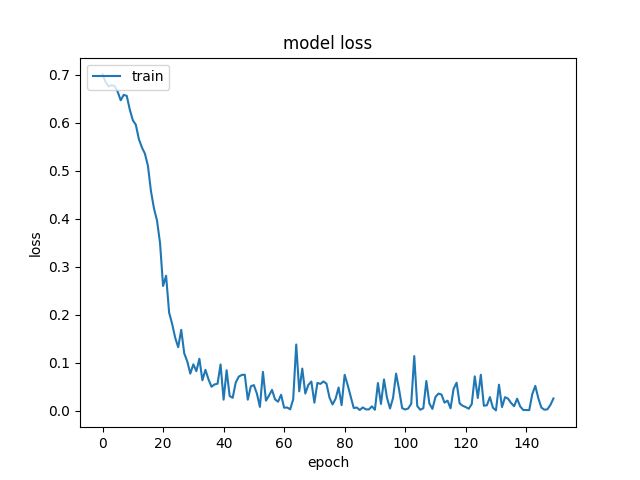

Started training the model but the accuracy did not increase -- it plateaued. This is what I am always scared of while training a neural network -- the solution is either altering the neural network layers or increasing the training time which might lead to overfitting. Overfitting is the worst thing that can happen here. I will come back later.

17th Oct 2017

The idea of creating a NumPy (.npy) file while generating the data is not gonna work because the data doesn't get concatenated but rather gets overwritten. So I have to create the data file first and then concatenate new data into it. I will come back later.
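
The overwrite problem comes from `np.save` always replacing the file. One way around it, sketched below under my own assumptions (the helper name `append_to_npy` is hypothetical, and the path is expected to end in `.npy`), is to load whatever is already there, concatenate, and save the result back.

```python
import os

import numpy as np


def append_to_npy(path, new_batch):
    """Add new_batch to the array stored at path instead of overwriting it."""
    new_batch = np.asarray(new_batch)
    if os.path.exists(path):
        existing = np.load(path)
        # Rows are joined along axis 0, so the image shapes must match.
        combined = np.concatenate([existing, new_batch], axis=0)
    else:
        # First call: nothing to merge with yet.
        combined = new_batch
    np.save(path, combined)
    return combined.shape
```

This still reads the whole file into memory on every call, so it fixes the overwriting but not the RAM problem on a 4GB machine.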

The training was pretty good! To be honest, it was just okay -- 20 epochs is not good. Today, I will try 100-200 epochs and see how it goes.

The issue of saving the new image data is solved now. Hurray!

This gap in my blog entries is because I did other things during the vacation, and by November school had started, so I probably got busy with school work.

17th Nov 2017

I just lost everything!

I have been using Ubuntu 16.10 for the last few years. It is not the most reliable OS but what can you expect from Linux -- it is a work-in-progress kind of OS. But a few days ago, I saw an update to 17.10 which showed pretty good design changes and I thought sure, let's update it. Didn't realize it was a beta. Tried the installation and it failed -- and corrupted the bootloader. It means I cannot boot into Ubuntu or Windows. Maybe the only solution is to reinstall everything.

Thankfully, I had uploaded a copy of my projects to GitHub, but the training data is gone now! And the copy on GitHub is not the latest copy -- it is an old one, which means I have lost all the new code that I had written. I know how I wrote it, so it might not be that hard to rewrite, but this is annoying.

23rd Nov 2017

Alright, back to 16.10 again. Everything is fine except for OpenCV, which is a library for image processing -- it has failed every time I tried to install it.

UPDATE: I just fixed the OpenCV video issue on my computer. I feel relieved now!

24th Nov 2017

I'm stuck on a problem that I had solved a month ago. Switching to 17.10 was the biggest mistake of my life. I wish I could go back in time and stop myself from doing that.

I did not write as frequently as before -- got busy with school -- and probably irritated by the bugs. But I "persevered" with fixing some of them.

Now the accuracy of the classifier was bad. Sometimes, it would mistakenly classify me as a female. The solution was getting more data. So here comes the idea of getting new training datasets:

What do convicted Prisoners and an Image Classifier have in common?

Their mugshots are really good training data -- even better when they have side profiles.

This takes a bit of a dark turn but looking back it is hilarious that this was my solution. They can be good training data if one can find a huge collection of such images. But it comes with the grunt work of labeling -- so I would have to manually cut and paste images from one folder to another folder.

So I did it; downloaded as many images as I could. Used the dlib program to extract all the faces and manually labeled all the data. It was very exhausting but the chase for improvement drove me along with hour-long dubstep music blaring in the background -- my taste in music was never good.

So after completing the grunt work, I started the training. But this time, I wanted to train it 24/7 -- to the point of overfitting the model.

1st Dec 2017

The Classifier works like an angel after overtraining it for a week on a small dataset -- perfect. As long as it does not cause any trouble, I am okay with it being overfitted here.

So the last few days were spent trying to finish my homework for the most part. I gave only a few hours to this project every day but I think it was enough to complete it -- this also gave me a lot of time to think about improvements during boring classes. Some days I would be excited to come back home to code the solution. Other days, I would bang my head on the keyboard after unsuccessful attempts -- metaphorically.

At that time, the classifier worked using a camera feed -- the idea has always been to use the camera, but I suppose one can fiddle around with the code to make it work with video files as well.

There was one issue of FPS that made the video output very jittery -- this was due to my bad coding practice. But other than that, it was nearly perfect.

20th Dec 2017

I'm done with Image Classification. I have finished this project of Gender Classification. I thought it was impossible to fix the low FPS issue with code optimization, but I did it. I am happy with the Classifier.

But when I showed it to the teachers at school, some were impressed (the non-STEM teachers), while others expected it to be more reliable and to classify more than just humans. I think it was a fair suggestion but I won't do that for now. But it was hilarious to walk around the school with a laptop looking like an idiot and classify my classmates. I still remember the fun we had in the boarders' room -- did not expect a piece of garbage code could bring so much joy. I wish I had recorded all of that -- but got too caught up in the moment.

The code is available on GitHub. If anyone wants to try it -- be my guest, but heads up for a dreadful bug due to recent changes in the Keras library -- I have not bothered fixing it. The last time I used it was for creating sample videos for a talk on Artificial Intelligence at my school -- it did its job. The AI is good; it works on everyone, maybe not so well on younger people since it never got trained on their data. But it rarely *coughs* makes mistakes like this:

I know this is a very long and boring blog. I will write something better next time but for now, this is what I did back then and still do -- and I get to relive it once again here and who wouldn't want that.